Rookie Website Mistakes, Part 2: Not Allowing Google to See Your Website

August 17th, 2017 by

Congrats, you’ve finally created a website for your business! And in the last post in our Rookie Website Mistakes blog series, you even learned how to bring your site up to speed. Now’s the part where you pat yourself on the back and start thinking about how to work your way to the top of the first page in the search results.

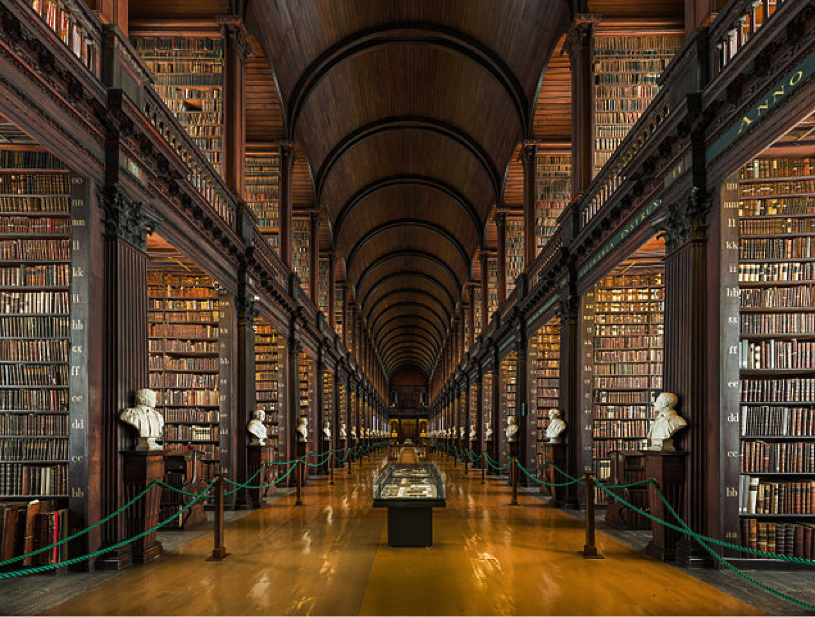

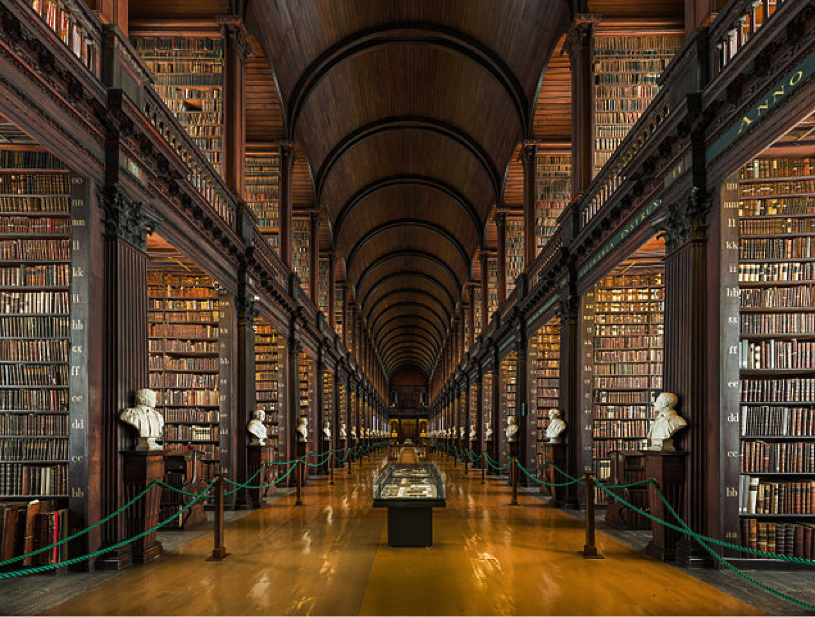

Well, hold your horses: Google’s really smart, but it’s not magic. Think of the search engine results pages (SERPs) as an insanely big library. If you’ve written a novel, the book doesn’t automatically appear on the correct shelf. Someone has to read it, decide what it’s about, and figure out where to catalog it among the existing shelves. In the same way, Google needs to actually “read” your website before it can decide where it belongs in the SERPs.

And if you’ve accidentally prevented Google from reading your site at all, it has no way to fit it into its library. As a result, the “if you build it, they will come” mindset definitely doesn’t apply: potential customers won’t find you if your site doesn’t show up when they search for it. Accidentally blocking search engines is a very common rookie mistake when moving WordPress sites from a staging server to a live one, but a little knowledge of how Google works can help you catch it.

How Does Google Find and “Read” Your Website?

According to Verisign’s Domain Name Industry Brief, the first quarter of this year closed with approximately 330.6 million domain name registrations across all top-level domains. Google’s a huge company, but its employees don’t have time to read all of these sites. Instead, Google entrusts that task to automated programs, commonly called “spiders” or “crawlers” (Google’s is named Googlebot), that discover your website and analyze its contents.

These bots “crawl” websites by following links from one page to the next, bringing information back to the search engine. Google also uses these spiders to pick up updates to your site, but since you’re just starting out as a website owner, let’s focus on site discovery. “Indexing” is the step that follows discovery: Google analyzes what the crawler brought back and decides where your pages fit into its library. A page has been indexed once it has been recorded in Google’s index, the search engine’s massive database, which is what allows the page to actually show up in the SERPs.
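To make the link-following idea concrete, here is a toy breadth-first crawl over a made-up, in-memory site. The page paths, `SITE_LINKS`, and `crawl` are hypothetical; a real crawler fetches pages over HTTP, obeys robots.txt, and schedules revisits, none of which is modeled here. What the sketch does show is that a page nothing links to is never reached by link-following alone.

```python
from collections import deque

# Hypothetical site: each page maps to the pages it links to.
# "/old-promo" exists on the server, but no other page links to it.
SITE_LINKS = {
    "/": ["/about", "/services"],
    "/about": ["/", "/contact"],
    "/services": ["/services/seo", "/contact"],
    "/services/seo": ["/services"],
    "/contact": [],
    "/old-promo": ["/"],
}


def crawl(start, links):
    """Breadth-first link following: return pages in the order discovered."""
    discovered = [start]
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        # "Visit" the page and queue every not-yet-seen page it links to.
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                discovered.append(target)
                queue.append(target)
    return discovered


if __name__ == "__main__":
    found = crawl("/", SITE_LINKS)
    print(found)
    # Pages that exist but were never reached by following links:
    print(sorted(set(SITE_LINKS) - set(found)))
```

Starting from the home page, the crawl finds five pages and never reaches `/old-promo`, because nothing links to it.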

One interesting point is that “crawled” and “indexed” are related but not dependent on each other. Just because a bot has visited a page doesn’t mean the page has been indexed. Similarly, in rare cases a page can be indexed without ever having been crawled: for example, a URL blocked by robots.txt can still appear in search results, usually without a description, if other pages link to it.

What Happens When Googlebot Is Blocked?

If Googlebot can’t see what’s on your site, it can’t crawl your pages, and pages that haven’t been crawled generally won’t be indexed. In turn, your site may not show up in the SERPs at all, or may lose rankings it already has.

There are a few ways your site might be blocking Googlebot:

- Firewalls – Using a firewall or DoS protection system is good practice, but your systems might mistake Googlebot for a threat. Because Googlebot tends to make far more server requests than the average human visitor, your firewall might flag this as malicious behavior and block the bot from crawling your website.

- Intentional blocking – The webmaster of your site might intentionally block Googlebot in an attempt to control how the site is crawled and indexed.

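- DNS issues – If your DNS records are misconfigured or your DNS provider is having problems, Googlebot may be unable to resolve your domain name and reach your site at all.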
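If you add a firewall exception for Googlebot, don’t trust the User-Agent header alone, since any scraper can claim to be Googlebot. Google’s documented approach is a two-step DNS check: do a reverse lookup on the requesting IP, confirm the hostname ends in googlebot.com or google.com, then do a forward lookup on that hostname and confirm it maps back to the same IP. Below is a minimal Python sketch of that check. The function name is hypothetical, it handles IPv4 only (`socket.gethostbyname_ex` returns IPv4 addresses), and the lookup functions are parameters so the logic can be tested without a network.

```python
import socket

# Hostname suffixes Google documents for Googlebot's reverse-DNS names.
GOOGLE_CRAWLER_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip, reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Return True only if `ip` passes the reverse + forward DNS check."""
    # Step 1: reverse DNS (PTR) lookup of the requesting IP address.
    try:
        hostname = reverse_lookup(ip)[0]
    except OSError:  # socket.herror and socket.gaierror subclass OSError
        return False
    # Step 2: the hostname must belong to one of Google's crawler domains.
    if not hostname.lower().rstrip(".").endswith(GOOGLE_CRAWLER_SUFFIXES):
        return False
    # Step 3: the hostname must resolve back to the same IP; otherwise
    # whoever controls an IP block's PTR records could fake the name.
    try:
        forward_ips = forward_lookup(hostname)[2]
    except OSError:
        return False
    return ip in forward_ips
```

In practice you’d cache the result per IP address, since running two DNS lookups on every request would slow your site down.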

How Do You Ensure That You Aren’t Telling Helpful Bots to “Get Lost”?

Here are a few things to double-check to be sure you’re putting out the welcome mat for helpful crawlers.

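First things first: check your pages for a noindex robots meta tag, such as `<meta name="robots" content="noindex">`, which basically tells bots “thanks, but no thanks.” It seems obvious, but removing that one line of code may be all you need to do to get indexed. On WordPress, the usual culprit is the “Discourage search engines from indexing this site” option under Settings > Reading, which is often switched on for a staging site and forgotten at launch.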
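If you have a lot of pages to check, a short script can scan the HTML for you. Here is a minimal sketch using Python’s standard-library `html.parser`; `RobotsMetaFinder` and `has_noindex` are hypothetical names. It looks at `robots` and `googlebot` meta tags and treats `none` as equivalent to `noindex`. It does not check the `X-Robots-Tag` HTTP header, which can also carry a noindex directive.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)  # attribute names arrive lowercased; values may be None
        name = (attrs.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html):
    """Return True if the page's robots meta tags ask search engines not to index it."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return "noindex" in finder.directives or "none" in finder.directives
```

Self-closing tags like `<meta ... />` are handled too, because `HTMLParser` routes them through `handle_starttag`.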

Next, check your robots.txt file. Using this file at the root of your site isn’t a mistake in itself; used well, it lets you tell bots exactly which pages you want crawled and which bots you want to access your site. Used incorrectly, though, it can block Googlebot from seeing your site at all: a single `Disallow: /` line under `User-agent: *` shuts out every crawler that follows the file. Google offers plenty of documentation to help you understand how to use robots.txt files effectively with Googlebot.
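For a quick sanity check, Python’s standard-library `urllib.robotparser` can tell you whether a given URL is allowed for the Googlebot user agent. The two robots.txt files below are illustrative, and `googlebot_can_fetch` is a hypothetical helper. Keep in mind that Python’s parser applies the first matching rule in file order and doesn’t support Google’s `*` and `$` path wildcards, so for complex files its answer can differ from Googlebot’s; Google’s own tools remain the authoritative check.

```python
from urllib.robotparser import RobotFileParser

# Illustrative "left over from staging" file: blocks every crawler everywhere.
STAGING_ROBOTS = """\
User-agent: *
Disallow: /
"""

# Illustrative production file: only the WordPress admin area is off-limits.
PRODUCTION_ROBOTS = """\
User-agent: *
Disallow: /wp-admin/
"""


def googlebot_can_fetch(robots_txt, url):
    """Check `url` against robots.txt rules as they apply to Googlebot."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)
```

With the staging file, even the home page comes back as blocked; with the production file, only URLs under `/wp-admin/` do.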

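You might also want to configure URL parameter handling in Google Search Console (formerly Google Webmaster Tools). This tells Googlebot which parameters in your URLs, such as session IDs or sort orders, don’t change a page’s content, so it doesn’t waste time crawling duplicate versions of the same page.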
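To see why parameters matter, here is a minimal sketch that collapses URL variants into one canonical form with Python’s `urllib.parse`. It assumes a hypothetical site where `sessionid` and `sort` don’t change what the page shows; `IGNORED_PARAMS` and `canonicalize` are illustrative names. This only illustrates the duplicate-URL problem the setting addresses; it is not how Search Console works internally.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that don't change the page's content on this site.
IGNORED_PARAMS = {"sessionid", "sort"}


def canonicalize(url):
    """Drop content-irrelevant query parameters and sort the remaining ones."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit(parts._replace(query=urlencode(kept)))


if __name__ == "__main__":
    variants = [
        "https://example.com/shoes?sessionid=abc123",
        "https://example.com/shoes?sort=price&sessionid=xyz789",
        "https://example.com/shoes",
        "https://example.com/shoes?color=red",
    ]
    # Four crawlable URLs, but only two distinct pages.
    print(sorted({canonicalize(u) for u in variants}))
```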

Finally, if a firewall or a bot-blocking script is the culprit, you’ll need to manually allow Googlebot through. For DNS issues you can’t fix on your own, contact your DNS provider.
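Before contacting your DNS provider, it can help to confirm whether your domain resolves at all. Here is a minimal sketch using Python’s standard-library `socket.getaddrinfo`; `resolve_addresses` is a hypothetical helper. Note that a lookup from your own machine only shows what your resolver sees, which isn’t necessarily what Googlebot sees.

```python
import socket


def resolve_addresses(hostname):
    """Return the sorted IP addresses `hostname` resolves to, or [] on failure."""
    try:
        results = socket.getaddrinfo(hostname, None)
    except (OSError, UnicodeError):  # socket.gaierror is a subclass of OSError
        return []
    # Each result is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the address string for both IPv4 and IPv6.
    return sorted({sockaddr[0] for *_, sockaddr in results})
```

For example, `resolve_addresses("www.example.com")` (swap in your own domain, with and without `www`) returning an empty list is a strong hint that the problem is DNS rather than your server.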

How Long Does It Take Google to Crawl Your Site?

Note that even after making these changes, you’ll need to give Google at least a few days to index your site; it won’t happen instantly. Once you’ve made sure Google can access your site for crawling and indexing, you can move on to optimizing your website so it’s easier for spiders to crawl. If you need help with that next step, our team would be happy to get you on the right track. Contact an expert from our team anytime.

Stay tuned for the next entry in our blog series: Rookie Website Mistakes, Part 3: Your Site Isn’t Mobile Friendly.
